In case you missed it, U.S. Senator John Hickenlooper recently took several actions to sketch a vision for the regulation of AI.

On Monday, Hickenlooper proposed a framework for federal AI regulation at the Silicon Flatirons Flagship Conference. Hickenlooper’s proposal focuses on three policy areas: 1) AI transparency and user literacy, 2) consumer data protection, and 3) international coalition building. He also proposed the development of standards for third-party auditors who would be able to audit and certify AI companies’ compliance with federal regulations.

Hickenlooper also sent two letters: the first calling on the CEOs of X and Meta to respond to violent and explicit AI-generated images circulating online, and the second urging the Department of Labor to prepare American workers to work alongside artificial intelligence in the workplace.

Check out what they’re saying:

Axios: Colorado leaders want a crackdown on AI and deepfakes

Driving the news: U.S. Sen. John Hickenlooper, who is backing legislation in Congress to increase accountability on AI creators, stressed the current “historic inflection point” on the issue during a speech Monday at a conference hosted by the University of Colorado Boulder’s law school.

The Democrat said he is worried about negative impacts on certain segments of the workforce, as well as the impact on society at large.

What he’s saying: “The biggest question we should be asking ourselves today is if we want to recreate the social media self-policing tragedy with AI,” Hickenlooper said at the conference, referring to exploitation of children and suicides attributed to platforms like Facebook and TikTok.

- “And whether these AI companies should be shielded from legal liabilities if they aren’t doing enough to prevent the harms their systems could create.”

Axios: Everybody wants to audit AI, but nobody knows how

Some legislators and experts are pushing independent auditing of AI systems to minimize risks and build trust.

Why it matters: Consumers don’t trust big tech to self-regulate, and government standards may come slowly or never.

The big picture: Failure to manage risk and articulate values early in the development of an AI system can lead to problems ranging from biased outcomes from unrepresentative data to lawsuits alleging stolen intellectual property.

Driving the news: Sen. John Hickenlooper (D-Colo.) announced in a speech Monday that he will push for auditing of AI systems, because AI models are using our data “in ways we never imagined and certainly never consented to.”

- “We need qualified third parties to effectively audit generative AI systems,” Hickenlooper said. “We cannot rely on self-reporting alone. We should trust but verify” claims of compliance with federal laws and regulations, he said.

Politico: Hickenlooper’s AI Thoughts

Sen. John Hickenlooper (D-Colo.) endorsed a national data privacy law, workforce training, AI auditing standards and more in a framework he revealed at a Silicon Flatirons conference on Monday. Hickenlooper is chair of the Senate subcommittee on Employment and Workplace Safety, which oversees legislation about AI’s impact on the labor force, a hot-button issue for multiple lawmakers in Congress. His public positions on AI issues come as Senate Majority Leader Chuck Schumer tries to build bipartisan consensus on the Senate’s AI legislation priorities.

Politico Morning Tech: ONBOARDING AI INTO THE WORKPLACE

Sens. John Hickenlooper (D-Colo.) and Mike Braun (R-Ind.) sent a letter to acting Labor Secretary Julie Su, seeking information on how DOL is working to ensure the workforce is prepared to embrace AI.

“Artificial intelligence is reshaping how we interact with every part of the world, including our workplaces,” Hickenlooper and Braun wrote. “Responding to this change and effectively preparing our workforce for the jobs of the future will require a coordinated, thoughtful effort across government and the private sector.”

President Joe Biden’s AI executive order directed DOL to evaluate federal agencies’ ability to aid workers whose jobs are disrupted by AI and develop best practices for employers looking to implement AI technologies.

Hickenlooper and Braun want to know how DOL is progressing on those tasks, including how it plans to improve workforce training on AI and how the department’s guidelines will keep pace with the advancement of AI. The senators also want to know if there are any existing federal laws that Congress may need to update “to address the growth and advancement of AI in the workplace.”

Sen. John Hickenlooper (D-CO) is offering a “framework” for regulating artificial intelligence based on creating an auditing system for the development and use of safe AI technologies, with a focus on transparency, protecting consumer data and building international coalitions.

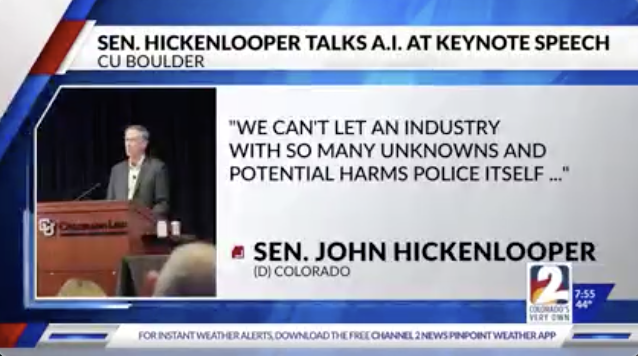

“We can’t let an industry with so many unknowns and potential harms police itself,” said Hickenlooper in a Feb. 5 keynote speech at the Silicon Flatirons Flagship conference. “We need qualified third parties to effectively audit generative AI systems and verify their claims of compliance with federal laws and regulations,” he added.

…His proposed legislation would require that AI systems are “transparent about the data models they are trained on and the personal data the companies collect. Whether it is determining when consumers are seeing AI-generated images or when an AI system is making hiring decisions, consumers deserve to know,” according to a statement from his office.

WATCH: CW Denver: Hickenlooper Lays Out AI Framework

In the wake of a disturbing incident involving sexually explicit AI-generated images of Taylor Swift that went viral on X (formerly Twitter), U.S. Sen. John Hickenlooper (D-CO) has intensified calls for more stringent oversight of social media platforms, stating: “Today’s model of self-policing for online platforms is not enough to avoid putting people’s children, teenagers, and loved ones at risk.”

Hickenlooper’s letter last week to the CEOs of X and Meta states that current content moderation practices are insufficient, particularly the slow response to serious safety risks. In the letter, he outlines a set of questions aimed at understanding the platforms’ procedures for addressing non-consensual explicit images and deepfakes, which are fake videos, images, or audio generated by artificial intelligence.

The Taylor Swift deepfakes, which took 17 hours for the platform to address and remove, have not only elicited a direct response from Hickenlooper but also come amid a broader national examination of social media’s impact on user safety.

…Hickenlooper’s letter, however, calls for concrete actions beyond apologies, pressing, “Everyone should feel heard when they raise a complaint with a platform. Responses to minors’ safety must meet a much higher sense of urgency.”

###